Lately I’ve been studying generative models to build something with them. This post explains the basics of a foundational approach—Generative Adversarial Networks (GANs)—and then walks through a simple implementation with TensorFlow.

Since GANs are a kind of generative model, let’s briefly review generative models first.

What is a generative model?

A generative model outputs data such as photos, illustrations, or music.

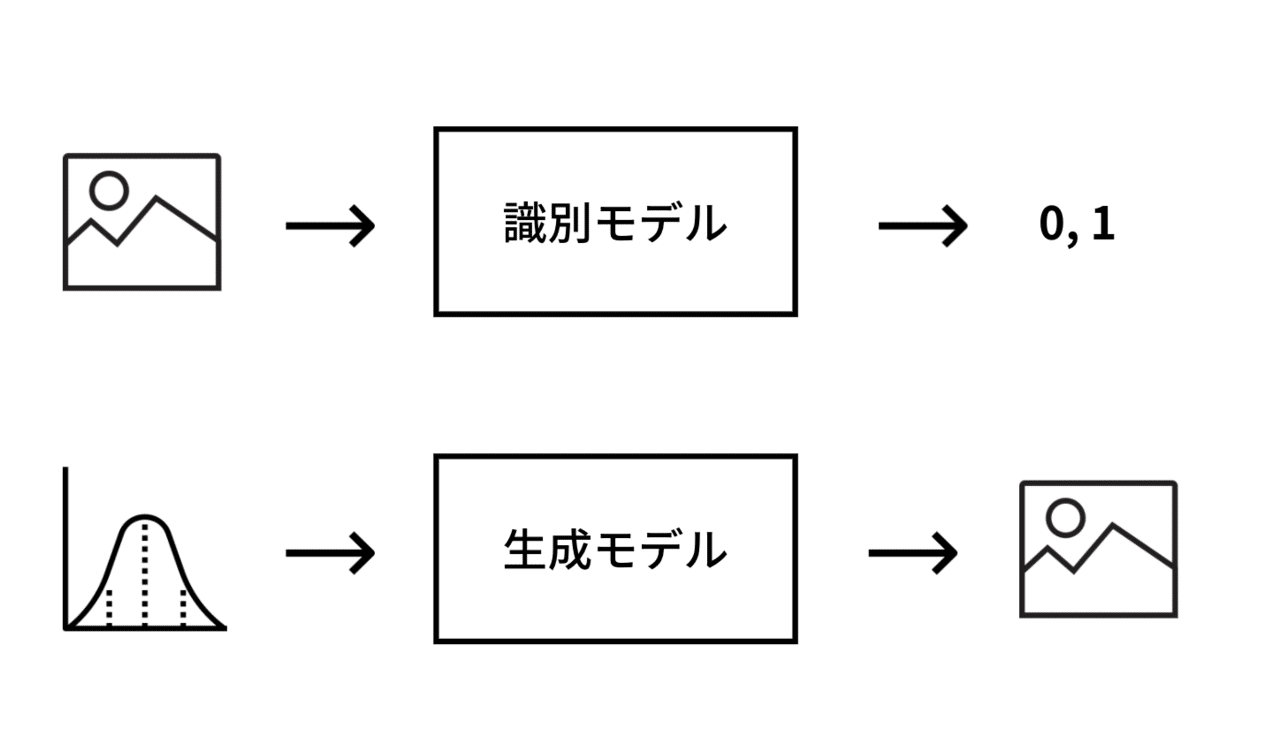

Machine learning is often used for tasks like recognizing what’s in an image, classifying true/false, or predicting numeric values—these are typically discriminative tasks. For binary classification, the output is a label like 0 or 1.

Generative models differ: they take a random vector as input and output data (e.g., an image).
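To make that input/output contract concrete, here is a toy sketch in NumPy. The `toy_generator` function, its random weight matrix, and the sizes (a 100-dimensional noise vector, a 28×28 output) are illustrative assumptions. It is not a trained model; it only shows that a generator is a function from a random vector to a data-shaped array.

```python
import numpy as np

rng = np.random.default_rng(0)

NOISE_DIM = 100          # assumed size of the random input vector
IMAGE_SHAPE = (28, 28)   # assumed output size (one grayscale image)

# Untrained, randomly initialised weights stand in for a real generator.
weights = rng.normal(scale=0.1, size=(NOISE_DIM, IMAGE_SHAPE[0] * IMAGE_SHAPE[1]))

def toy_generator(z):
    """Map a batch of noise vectors to a batch of images with values in [-1, 1]."""
    flat = np.tanh(z @ weights)
    return flat.reshape(-1, *IMAGE_SHAPE)

z = rng.normal(size=(3, NOISE_DIM))   # three random input vectors
images = toy_generator(z)
print(images.shape)                   # (3, 28, 28)
```

Each new random vector gives a different output. Training is what turns those outputs from noise into plausible data.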

They literally create data that didn’t exist before—very “AI‑like”.

What is a GAN?

Among generative models, GANs have been particularly successful in recent years.

Modern GANs combine generative modeling with deep learning to produce high‑fidelity outputs. For example, here is an image generated by StyleGAN—it’s remarkably photo‑realistic.

GAN basics

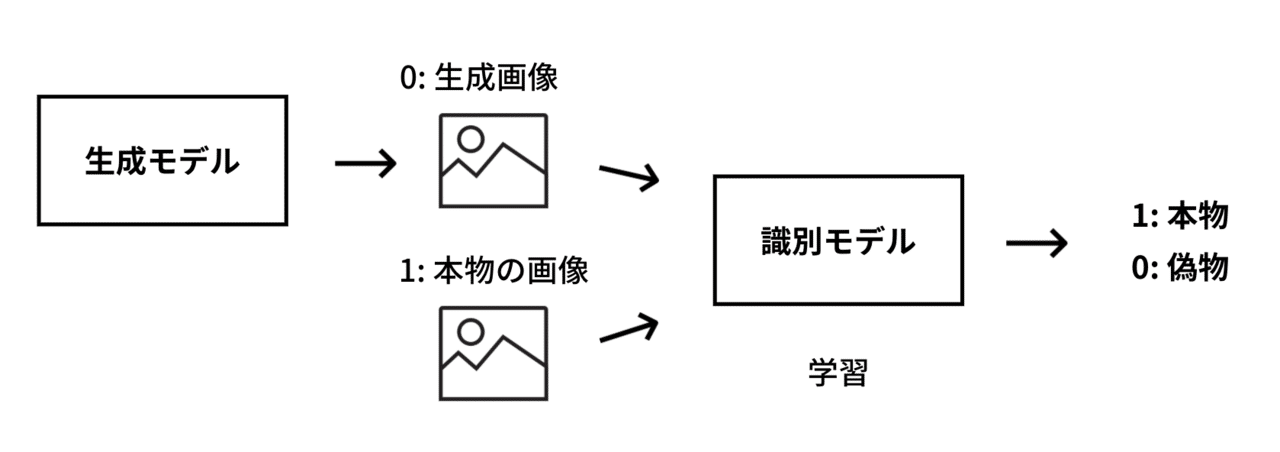

GANs pair two models: a generator, which produces data, and a discriminator, which classifies real vs. fake. They are trained together.

The discriminator learns to tell real data (label 1) from generated (label 0):

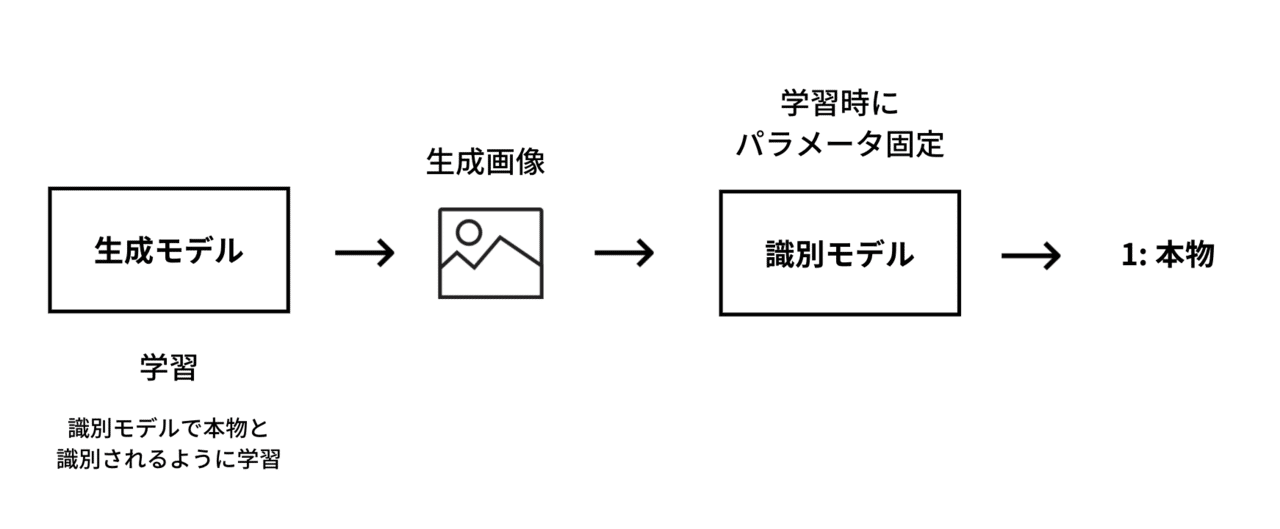

The generator takes a random vector and is trained through the discriminator so that its outputs are judged “real”. In other words, the generator learns to produce images that fool the discriminator.
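The two objectives can be sketched with plain NumPy. Binary cross-entropy is assumed as the loss here, since it is a common choice for this setup; the discriminator scores below are made-up numbers, not outputs of a real model.

```python
import numpy as np

def binary_cross_entropy(y_true, y_pred, eps=1e-7):
    """Mean binary cross-entropy between target labels and predicted probabilities."""
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(-np.mean(y_true * np.log(y_pred)
                          + (1.0 - y_true) * np.log(1.0 - y_pred)))

batch_size = 4
real_labels = np.ones((batch_size, 1))    # label 1 = real
fake_labels = np.zeros((batch_size, 1))   # label 0 = generated

# Hypothetical discriminator outputs (probability that the input is real).
d_on_real = np.full((batch_size, 1), 0.9)
d_on_fake = np.full((batch_size, 1), 0.2)

# Discriminator step: real images should score 1, generated images 0.
d_loss = (binary_cross_entropy(real_labels, d_on_real)
          + binary_cross_entropy(fake_labels, d_on_fake))

# Generator step: the same generated images are paired with label 1,
# so the loss is small only when the discriminator is fooled.
g_loss = binary_cross_entropy(real_labels, d_on_fake)

print(round(d_loss, 4), round(g_loss, 4))   # 0.3285 1.6094
```

With these numbers the discriminator is doing well (low loss) and the generator poorly (high loss), because the fakes only score 0.2.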

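Important: when training the generator, do not update the discriminator’s parameters. The generator’s objective rewards outputs that are judged “real”, so if the discriminator were also updated under that objective, it would simply learn to accept fakes. Keeping it fixed during this step keeps it a strict judge.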
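In Keras, one common way to do this is to set `discriminator.trainable = False` before building and compiling the combined generator-plus-discriminator model. The sketch below assumes that pattern. The tiny `Dense` models and the sizes `noise_dim` and `data_dim` are placeholders for illustration, not the DCGAN layers from the notebook.

```python
import numpy as np
from tensorflow.keras.models import Model
from tensorflow.keras.layers import Input, Dense

noise_dim = 8   # placeholder sizes, chosen only to keep the example small
data_dim = 4

# Placeholder generator: noise vector -> fake sample.
z_in = Input(shape=(noise_dim,))
generator = Model(z_in, Dense(data_dim, activation="tanh")(z_in))

# Placeholder discriminator: sample -> probability of being real.
x_in = Input(shape=(data_dim,))
discriminator = Model(x_in, Dense(1, activation="sigmoid")(x_in))

# Freeze the discriminator, then build and compile the combined model.
discriminator.trainable = False
gan_in = Input(shape=(noise_dim,))
gan = Model(gan_in, discriminator(generator(gan_in)))
gan.compile(optimizer="adam", loss="binary_crossentropy")

d_before = [w.copy() for w in discriminator.get_weights()]
g_before = [w.copy() for w in generator.get_weights()]

# One generator step: noise in, target label 1 ("real") out.
noise = np.random.normal(size=(16, noise_dim)).astype("float32")
gan.train_on_batch(noise, np.ones((16, 1), dtype="float32"))

d_after = discriminator.get_weights()
g_after = generator.get_weights()
print(all(np.array_equal(b, a) for b, a in zip(d_before, d_after)))  # discriminator unchanged
print(any(not np.array_equal(b, a) for b, a in zip(g_before, g_after)))  # generator updated
```

The discriminator's own update, on real and generated batches, happens in a separate step that this sketch omits.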

Implementing a GAN with TensorFlow

We’ll implement a DCGAN in TensorFlow. Training takes time, so use a GPU if possible; Google Colaboratory provides free GPUs.

We’ll proceed as follows:

- (1) Load data

- (2) Build the generator

- (3) Build the discriminator

- (4) Compile the model

- (5) Train the model

The runnable notebook is published here:

(1) Load data

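Use the MNIST dataset, which TensorFlow can load through tensorflow.keras.datasets (it is downloaded on first use). The training images come as an array of shape (60000, 28, 28); add a channel axis so that each image has shape (28, 28, 1), which is what the convolutional layers expect.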

from tensorflow.keras.datasets import mnist

(x_train, y_train), (x_test, y_test) = mnist.load_data()

x_train = x_train.reshape(-1, 28, 28, 1)

x_test = x_test.reshape(-1, 28, 28, 1)

MNIST pixel values are integers from 0 to 255; scale them to [-1, 1] for stable training:

x_train = (x_train - 127.5) / 127.5

x_test = (x_test - 127.5) / 127.5

(2) Build the generator

Using Keras’s Functional API:

from tensorflow.keras.models import Model

from tensorflow.keras.layers import Input, Dense, Conv2D, LeakyReLU, BatchNormalization, Reshape, UpSampling2D, Activation

Constants:

kernel_size = 5

noize_vector_dim = 100

We want 28×28 outputs, so choose an input spatial size that reaches 28 by repeated doubling in the upsampling layers, for example 7×7 → 14×14 → 28×28.

Upsampling repeats rows/columns to enlarge feature maps.
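The repetition can be reproduced in a few lines of NumPy. This is a sketch of the idea rather than the layer itself: the helper `upsample_2x` is a name made up for this example. It mirrors what Keras's `UpSampling2D` does with its default `size=(2, 2)` and nearest-neighbour interpolation. The 7×7 starting size is an assumption, chosen because two doublings give 28×28.

```python
import numpy as np

def upsample_2x(feature_map):
    """Nearest-neighbour upsampling: repeat every row and every column twice."""
    return feature_map.repeat(2, axis=0).repeat(2, axis=1)

small = np.array([[1, 2],
                  [3, 4]])
print(upsample_2x(small))
# [[1 1 2 2]
#  [1 1 2 2]
#  [3 3 4 4]
#  [3 3 4 4]]

# Starting from an assumed 7x7 feature map, two doublings reach 28x28.
x = np.zeros((7, 7))
x = upsample_2x(x)   # 14x14
x = upsample_2x(x)   # 28x28
print(x.shape)       # (28, 28)
```

Upsampling adds no new information by itself. In a DCGAN-style generator, the convolution layers that follow each upsampling step are what refine the enlarged feature maps.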

... (Define generator and discriminator architectures and training loop as in the notebook.)

Summary

We reviewed how GANs work and implemented a minimal DCGAN in TensorFlow. With a GPU and enough iterations, you should see the generator progressively produce more realistic digits.